Ed: What persuasive technologies might we routinely meet online? And how are they designed to guide us towards certain decisions?

There’s a broad spectrum, from the very simple to the very complex. A simple example would be something like Amazon’s “one-click” purchase feature, which compresses the entire checkout process into a split-second decision. This uses a persuasive technique known as “reduction” to minimise the perceived cost to a user of going through with a purchase, making it more likely that they’ll transact. At the more complex end of the spectrum, you have the whole set of systems and subsystems that make up online advertising. As it becomes easier to measure people’s behaviour over time and across media, advertisers are increasingly able to customise messages to potential customers and guide them down the path toward a purchase.

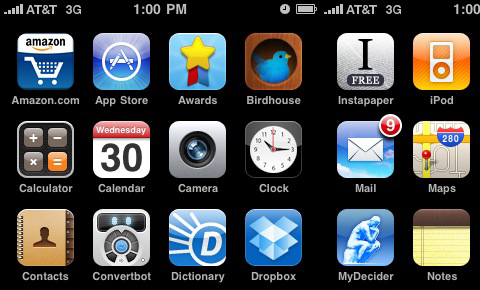

It isn’t just commerce, though: mobile behaviour-change apps have seen really vibrant growth in the past couple of years, particularly in health and fitness. Products like Nike+, Map My Run, and Fitbit let you monitor your exercise, share your performance with friends, use social motivation to help you define and reach your fitness goals, and so on. One interesting example I came across recently is called “Zombies, Run!”, which motivates by fright, spawning virtual zombies to chase you down the street while you’re on your run.

As one final example, if you’ve ever tried to deactivate your Facebook account, you’ve probably seen a good example of social persuasive technology: the screen that comes up saying, “If you leave Facebook, these people will miss you” and then shows you pictures of your friends. Broadly speaking, most of the online services we think we’re using for “free” (that is, the ones we’re paying for with the currency of our attention) have some sort of persuasive design goal. And this can be particularly apparent when people are entering or exiting the system.

Ed: Advertising has been around for centuries, so we might assume that we have become adept at recognising and negotiating it. What is it about these online persuasive technologies that poses new ethical questions or concerns?

The ethical questions themselves aren’t new, but the environment in which we’re asking them makes them much more urgent. There are several important trends here. For one, the Internet is becoming part of the background of human experience: devices are shrinking, proliferating, and becoming more persistent companions through life. In tandem with this, rapid advances in measurement and analytics are enabling us to optimise technologies for persuasiveness more quickly than ever. That persuasiveness is further augmented by applying knowledge of our non-rational psychological biases to technology design, which is happening much faster there than in slower-moving systems such as law or ethics. Finally, the explosion of media and information has made it harder for people to be intentional or reflective about their goals and priorities in life. We’re living through a crisis of distraction. The convergence of all these trends suggests that we could increasingly live our lives in environments of high persuasive power.

To me, the biggest ethical questions are those that concern individual freedom and autonomy. When, exactly, does a “nudge” become a “push”? When we call these types of technology “persuasive,” we’re implying that they shouldn’t cross the line into being coercive or manipulative. But it’s hard to say where that line is, especially when it comes to persuasion that plays on our non-rational biases and impulses. How persuasive is too persuasive? Again, this isn’t a new ethical question by any means, but it is more urgent than ever.

These technologies also remind us that the ethics of attention is just as important as the ethics of information. Across society, many important conversations deal with the tracking and measurement of user behaviour. But that information is valuable largely because it can be used to inform some sort of action, which is often persuasive in nature, and we don’t talk nearly as much about the ethics of the persuasive act as we do about the ethics of the data. If we did, we might decide, for instance, that some companies have a moral obligation to collect more of a certain type of user data, because it’s the only way they could know whether they were persuading a person to do something contrary to their well-being, values, or goals. Knowing a person better can be the basis not only for acting more wrongly toward them, but also more rightly.

For us as users, then, persuasive technologies demand that we be more intentional about how we define and express our own goals. The more persuasion we encounter, the clearer we need to be about what it is we actually want. If you ask most people what their goals are, they’ll say things like “spending more time with family,” “being healthier,” “learning piano,” etc. But we don’t all accomplish the goals we have: we get distracted. The risk of persuasive technology is that we’ll have more temptations, more distractions. But its promise is that we can use it to motivate ourselves toward the things we find fulfilling. So I think what’s needed is more intentional and habitual reflection about what our own goals actually are. To me, the ultimate question in all this is how we can shape technology to support human goals, and not the other way around.

Ed: What if a persuasive design or technology is simply making it easier to do something we already want to do: isn’t this just “user-centered design” (i.e. a good thing)?

Yes, persuasive design can certainly help motivate a user toward their own goals. In these cases it generally resonates well with user-centered design. The tension really arises when the design leads users toward goals they don’t already have. User-centered design doesn’t really have a good way to address persuasive situations, where the goals of the user and the designer diverge.

To reconcile this tension, I think we’ll probably need to get much better at measuring people’s intentions and goals than we are now. Longer-term, we’ll probably need to rethink notions like “design” altogether. When it comes to online services, it’s already hard to talk about “products” and “users” as though they were distinct entities, and I think this will only get harder as we become increasingly enmeshed in an ongoing co-evolution.

Ed: Governments and corporations are increasingly interested in “data-driven” decision-making: isn’t that a good thing? Particularly if the technologies now exist to collect “big” data about our online actions (if not our intentions)?

I don’t think data ever really drives decisions. It can definitely provide an evidentiary basis, but any data is ultimately still defined and shaped by human goals and priorities. We too often forget that there’s no such thing as “pure” or “raw” data — that any measurement reflects, before anything else, evidence of attention.

That being said, data-based decisions are certainly preferable to arbitrary ones, provided that you’re giving attention to the right things. But data can’t tell you what those right things are. It can’t tell you what to care about. This point seems to be getting lost in a lot of the fervour about “big data,” which, as far as I can tell, is a way of marketing analytics and relational databases to people who are not familiar with them.

The psychology of that term, “big data,” is actually really interesting. On one hand, there’s a playful simplicity to the word “big” that suggests a kind of childlike awe where words fail. “How big is the universe? It’s really, really big.” It’s the unknown unknowns at scale, the sublime. On the other hand, there’s a physicality to the phrase that suggests an impulse to corral all our data into one place: to contain it, mould it, master it. Really, the term isn’t about data abundance at all – it reflects our grappling with a scarcity of attention.

The philosopher Luciano Floridi likens the “big data” question to being at a buffet where you can eat anything, but not everything. The challenge comes in the choosing. So how do you choose? Whether you’re a government, a corporation, or an individual, it’s your ultimate aims and values (your ethical priorities) that should guide your choosiness. In other words, the trick is to make sure you’re measuring what you value, rather than just valuing what you already measure.

James Williams is a doctoral student at the Oxford Internet Institute. He studies the ethical design of persuasive technology. His research explores the complex boundary between persuasive power and human freedom in environments of high technological persuasion.

James Williams was talking to blog editor Thain Simon.