Two concepts have recently emerged that invite us to rethink the relationship between children and digital technology: the “datafied child” (Lupton & Williamson, 2017) and children’s digital rights (Livingstone & Third, 2017). The concept of the datafied child highlights the amount of data that is being harvested about children during their daily lives, and the children’s rights agenda includes a response to the ethical and legal challenges that the datafied child presents.

Children have never been afforded the full sovereignty of adulthood (Cunningham, 2009), but both these concepts suggest that children have become points of application for new forms of power that have emerged from the digitisation of society. The dominant form of this power has been called “platform capitalism” (Srnicek, 2016). As a result of platform capitalism’s success, there has never been a stronger association between data, young people’s private lives, their relationships with friends and family, their life at school, and the broader political economy. In this post I will define platform capitalism, outline why it has come to dominate children’s relationship to the internet, and suggest two particular reasons why this is problematic.

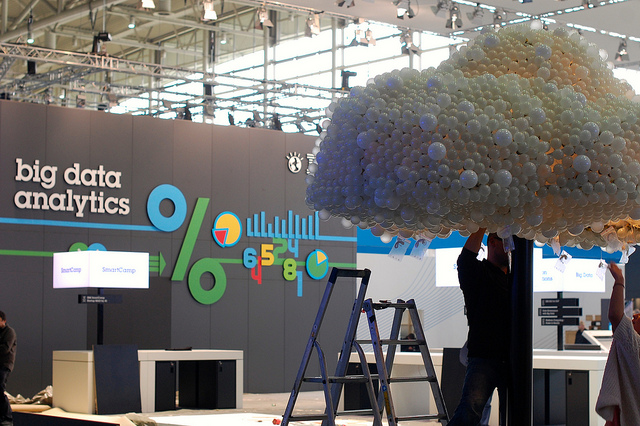

Children predominantly experience the Internet through platforms

‘At the most general level, platforms are digital infrastructures that enable two or more groups to interact. They therefore position themselves as intermediaries that bring together different users: customers, advertisers, service providers, producers, suppliers, and even physical objects’ (Srnicek, 2016, p. 43). Examples of platform capitalism include the technology superpowers: Google, Apple, Facebook, and Amazon. There are, however, many other relevant platforms that children and young people use. These include platforms for socialising, for audio-visual content, for communicating with smart devices and toys, and for games and sports franchises, as well as platforms that provide services (including in the public sector) used by children or their parents.

Young people choose to use platforms for play, socialising and expressing their identity. Adults have also introduced platforms into children’s lives: for example, Capita SIMS is a platform used by over 80% of schools in the UK for assessment and monitoring (over the coming months at the Oxford Internet Institute we will be studying such platforms, including SIMS, for The Oak Foundation). The popularity of tablets and smartphones has facilitated the use of platforms for personal purposes.

Amongst the young, there has been a sharp uptake in tablet and smartphone use at the expense of PC or laptop use. Sixteen per cent of 3-4-year-olds have their own tablet, and this proportion doubles for 5-7-year-olds. By the age of 12, smartphone ownership begins to outstrip tablet ownership (Ofcom, 2016). In our research at the OII, even when we included low-income families in our sample, 93% of teenagers owned a smartphone. This has brought about the ‘appification’ of the web that Zittrain predicted in 2008. As a result, children and young people predominantly experience the internet via platforms that we can think of as controlled gateways to the open web.

Platforms exist to make money for investors

In public discourse, some of these platforms are called social media. This term distracts us from the reason many of these publicly floated companies exist: to make money for their investors. It is only logical for all these companies to pursue the WeChat model that is becoming so popular in China. WeChat is a closed-circuit platform, in that it keeps all engagement with the internet, including shopping, betting, and video calls, within its corporate compound. This brings WeChat closer to a monopoly on data extraction.

Platforms have consolidated their success by buying out their competitors. Alphabet, Amazon, Apple, Facebook and Microsoft have made 436 acquisitions worth $131 billion over the last decade (Bloomberg, 2017). Alternatively, they simply mimic their competitors’ features. For example, after Facebook acquired Instagram, Instagram introduced Stories, a feature copied from Snapchat that lets users upload photos and videos as a ‘story’ that automatically expires after 24 hours.

The more data these companies capture that their competitors cannot, the more value they can extract from it and the better their business model works. It is unsurprising, therefore, that when we asked groups of teenagers during our research to draw a visual representation of what they thought the world wide web and internet looked like, almost all of them simply drew corporate logos (they also told us they had no idea that Facebook owns WhatsApp and Instagram, or that Google owns YouTube). Platform capitalism dominates and controls their digital experiences, but what provisions do these platforms make for children?

The General Data Protection Regulation (GDPR), set to be implemented in all EU states, including the UK, in 2018, says that processing data about a child below the age of 16 (a threshold member states may lower, but not below 13) shall be lawful only if and to the extent that consent is given or authorised by the child’s parent or guardian. Because most platforms are American-owned, they tend to apply a piece of US federal legislation known as COPPA (the Children’s Online Privacy Protection Act); the minimum age for using Snapchat, WhatsApp, Facebook, and Twitter, for example, is therefore set at 13. Yet the BBC found last year that 78% of children aged 10 to 12 had signed up to at least one such platform, such as Facebook, Instagram, Snapchat or WhatsApp.

Platform capitalism offloads its responsibilities onto the user

Why is this a problem? Firstly, because platform capitalism offloads its responsibilities onto problematically normative constructs of childhood, parenting, and paternal relations. Platform owners assume that children will always consult their parents before using their services, and that parents will read and understand the terms and conditions, which, as research confirms, few users, children or adults, ever even look at.

Moreover, our research found that many parents don’t have the knowledge, expertise, or time to monitor what their children are doing online. Some parents, for instance, worked night shifts or had more than one job. We talked to children who regularly moved between homes and whose estranged parents didn’t communicate with each other about supervising them online. We found that parents who are in financial difficulty, or affected by mental or physical illness, are often unable to keep on top of their children’s digital lives.

We also interviewed children who used strategies to manage their parents’ anxieties so that they would be left alone. They would, for example, allow their parents to be their friends on Facebook, but do all their personal communication on other platforms their parents knew nothing about. Often, then, the children who are most vulnerable offline, such as children in care, are also the most vulnerable online. My colleagues at the OII found that 9 out of 10 teenagers who are bullied online also face regular ‘traditional’ bullying. Helping these children requires extra investment from their families, as well as from teachers, charities and social services. Schools also bear the burden of tackling the fake news and extremism, such as Holocaust denial, that children can encounter on platforms.

This is typical of platform capitalism. It monetises what are called social graphs, i.e. the networks of users on its platforms, which it then makes available to advertisers. Social graphs are more than just nodes and edges representing our social lives: they embody often intimate or highly sensitive data (which can often be de-anonymised by linking, matching and combining digital profiles). When graphs become dysfunctional and manifest social problems such as abuse, doxxing, stalking, and grooming, local social systems and institutions, which are usually publicly funded, have to deal with the fall-out. These institutions are often under-resourced and ill-equipped to solve such problems, or are already overburdened.

Are platforms too powerful?

The second problem is the ecosystems of dependency that emerge around platforms, within which smaller companies and other corporations try to monetise their associations with successful platforms: they seek to get in on the monopolies of data extraction that the big platforms are creating. Many of these companies are not wealthy and therefore lack the infrastructure or expertise to develop robust security measures of their own. They may cut costs by neglecting security, or subcontract services to yet more companies, which are then added to the network of data sharers.

Again, the platforms offload responsibility onto the user. For example, WhatsApp tells its users: “Please note that when you use third-party services, their own terms and privacy policies will govern your use of those services”. These ecosystems are networks that are only as strong as their weakest link. There are many infamous examples, including the so-called ‘Snappening’, in which sexually explicit pictures harvested from Snapchat, a platform popular with teenagers, via a third-party service were released onto the open web. There is also a growing industry in fake apps that enable illegal data capture and fraud by exploiting the implicit trust users place in corporate walled gardens.

What can we do about these problems? Platform capitalism is restructuring labour markets and social relations in such a way that opting out of it is becoming an option available only to a privileged few. Moreover, we found that teenagers whose parents prohibited them from using social platforms often felt socially isolated and stigmatised. In the messy social reality of everyday life, platforms cannot continue to offload their responsibilities onto parents and schools.

We need solutions fast because, by tacitly accepting the terms and conditions of platform capitalism, particularly when it tells us that it is not responsible for the harms its business model can facilitate, we may now be passing an event horizon where these companies are becoming too powerful, unaccountable, and distant from our local reality.

References

Hugh Cunningham (2009) Children and Childhood in Western Society Since 1500. Routledge.

Sonia Livingstone and Amanda Third (2017) Children and young people’s rights in the digital age: An emerging agenda. New Media & Society, 19(5).

Deborah Lupton and Ben Williamson (2017) The datafied child: The dataveillance of children and implications for their rights. New Media & Society, 19(5).

Nick Srnicek (2016) Platform Capitalism. Polity.