Reports about the Facebook study ‘Experimental evidence of massive-scale emotional contagion through social networks’ have resulted in something of a media storm. Yet it can be predicted that ultimately this debate will result in the question: so what’s new about companies and academic researchers doing this kind of research to manipulate people’s behaviour? Isn’t that what a lot of advertising and marketing research does already – changing people’s minds about things? And don’t researchers sometimes deceive subjects in experiments about their behaviour? What’s new?

This way of thinking about the study has a serious defect, because there are three distinct issues raised by this research, and only the third is new. The first is the legality of the study, which, as the authors correctly point out, is covered by the informed consent that Facebook users give when they sign up to the service. Laws or regulation may be required here to prevent this kind of manipulation, but may also be difficult to frame, since it will be hard to draw a line between this experiment and other forms of manipulating people’s responses to media. However, Facebook may not want to lose users, for whom this way of manipulating them via their service may ‘cause anxiety’ (as the first author of the study, Adam Kramer, acknowledged in a blog post response to the outcry). In short, it may be bad for business, and hence Facebook may abandon this kind of research (but we’ll come back to this later). But this – companies using techniques that users don’t like, so they are forced to change course – is not new.

The second issue is academic research ethics. This study was carried out by two academic researchers (the other two authors of the study). In retrospect, it is hard to see how this study would have received approval from an institutional review board (IRB), the boards through which academic institutions check the ethics of studies. Perhaps stricter guidelines are needed here since a) big data research is becoming much more prominent in the social sciences and is often based on social media like Facebook, Twitter, and mobile phone data, and b) much – though not all (consider Wikipedia) – of this research therefore entails close relations with the social media companies who provide access to these data and the ability to experiment with the platforms, as in this case. Here, again, the ethics of academic research may need to be tightened to provide new guidelines for academic collaboration with commercial platforms. But this is not new either.

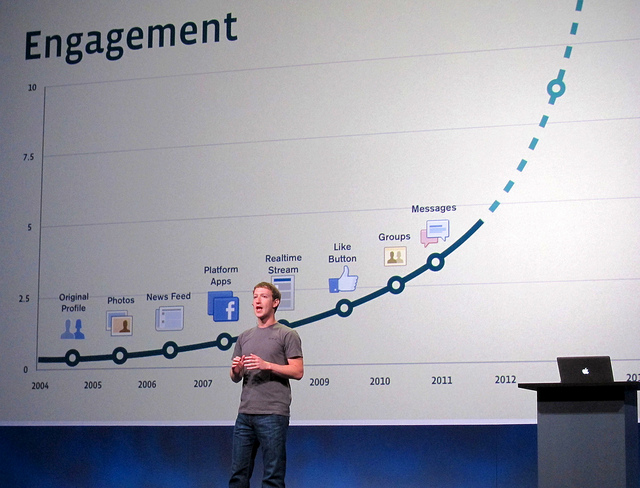

The third issue, which is the new and important one, is the increasing power that social research using big data has over our lives. This is of course even more difficult to pin down than the first two points. Where does this power come from? It comes from having access to data of a scale and scope that is a leap or step change from what was available before, and being able to perform computational analysis on these data. This is my definition of ‘big data’ (see note 1), and it clearly applies in this case, as in other cases we have documented: almost 700,000 users’ Facebook newsfeeds were changed in order to perform this experiment, and more than 3 million posts containing more than 122 million words were analysed. The result: it was found that more positive words in Facebook newsfeeds led to more positive posts by users, and the reverse for negative words.

What is important here are the implications of this powerful new knowledge. To be sure, as the authors point out, this was a study that is valuable for social science in showing that emotions may be transmitted online via words, not just in face-to-face situations. But it also provides Facebook with knowledge that it can use to further manipulate users’ moods; for example, making their moods more positive so that users will come to its – rather than a competitor’s – website. In other words, social science knowledge, produced partly by academic social scientists, enables companies to manipulate people’s hearts and minds.

This is not the Orwellian world of the Snowden revelations about phone tapping that have been in the news recently. It’s the Huxleyan Brave New World where companies and governments are able to play with people’s minds, and do so in a way whereby users may buy into it: after all, who wouldn’t like to have their experience on Facebook improved in a positive way? And of course that’s Facebook’s reply to criticisms of the study: the motivation of the research is that we’re just trying to improve your experience, as Kramer says in his blog post response cited above. Similarly, according to The Guardian newspaper, ‘A Facebook spokeswoman said the research…was carried out “to improve our services and to make the content people see on Facebook as relevant and engaging as possible”’. But improving experience and services could also just mean selling more stuff.

This is scary, and academic social scientists should think twice before producing knowledge that supports this kind of impact. But again, we can’t pinpoint this impact without understanding what’s new: big data is a leap in how data can be used to manipulate people in more powerful ways. This point has been lost by those who criticize big data mainly on the grounds of the epistemological conundrums involved (as with boyd and Crawford’s widely cited paper, see note 2). No, it’s precisely because knowledge is more scientific that it enables more manipulation. Hence, we need to identify the point or points at which we should put a stop to sliding down a slippery slope of increasing manipulation of our behaviours. Further, we need to specify when access to big data on a new scale enables research that affects many people without their knowledge, and regulate this type of research.

Which brings us back to the first point: true, Facebook may stop this kind of research, but how would we know? And have academics therefore colluded in research that encourages this kind of insidious use of data? We can only hope for a revolt against this kind of Huxleyan conditioning, but as in Brave New World, perhaps the outlook is rather gloomy in this regard: we may come to like more positive reinforcement of our behaviours online…

Notes

1. Schroeder, R. 2014. ‘Big Data: Towards a More Scientific Social Science and Humanities?’, in Graham, M., and Dutton, W. H. (eds.), Society and the Internet. Oxford: Oxford University Press, pp.164-76.

2. boyd, D. and Crawford, K. 2012. ‘Critical Questions for Big Data: Provocations for a cultural, technological and scholarly phenomenon’, Information, Communication and Society, 15(5), pp.662-79.

Professor Ralph Schroeder has interests in virtual environments, social aspects of e-Science, sociology of science and technology, and has written extensively about virtual reality technology. He is a researcher on the OII project Accessing and Using Big Data to Advance Social Science Knowledge, which follows ‘big data’ from its public and private origins through open and closed pathways into the social sciences, and documents and shapes the ways they are being accessed and used to create new knowledge about the social world.